Study: Compact neural networks as natural simulators of chaotic dynamics

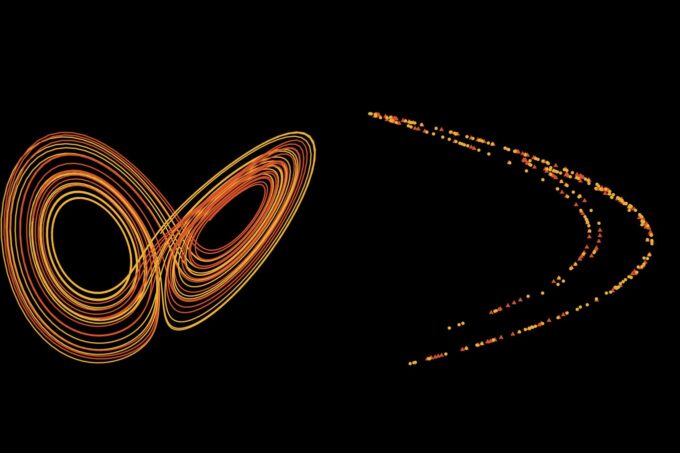

Tiny Neural networks using very little data for training learn to stretch, turn, flip, and fold vectors to mimic chaotic Lorenz attractor and Henon map dynamics for indefinitely long periods of time. Image credit: Ziwei Li

Climate processes, irregular heartbeats, long-term stock market forecasts, the flow of smoke plumes in the air, some planetary orbits, a double-rod pendulum, and bipedal walking robot simulations — these are only a smattering of the examples of complex, chaotic systems that pervade our natural and engineered environments that researchers would like to understand and make predictions about into the future. For this, researchers are considering the potential of neural networks, an algorithmic machine with computational neurons and connections inspired by the human brain, and capable of recognizing underlying relationships and patterns in data.

Theoretically, with enough time, scientists could characterize these dynamical systems with a rich set of deterministic equations. However, an intrinsic property that they all share is that small differences in initial conditions grow rapidly as time goes on — from a tiny bit of variability between starting conditions to diverging trajectories or very different observations. (Imagine leaves swirling in an ocean eddy and their evolving circulation paths.) A classic example of chaos in a system is a Lorenz attractor, or what’s better known as the two-lobed “butterfly effect” image, which shows how small differences can have big downstream effects. When using a set of 3 simple equations to model the Earth’s dry atmospheric convection, the late MIT professor Edward Lorenz and his colleagues noticed that the trajectories for close data points diverged, and the probability for being in a particular state of the numerical system could be the same as another very different condition. This phenomenon explained why, for instance, we have a good idea of what Earth’s climate conditions will be like in the near future (the big picture), but the accuracy of weather forecasts plummets after about two weeks, even if we know what the weather conditions are today.

Chaotic “non-linear” behavior like this has rendered scientists’ understanding of long-term complex system dynamics and predictions next to impossible, but advances in computation provide hope. Lately, researchers have wondered: could a neural network do this prediction and emulation work for us? Moreover, could a neural network “learn” to quickly reveal the patterns that emerge in real-world systems or their complex digital twins, without being excessively complicated, and if so, how? “We want appropriate network models for chaotic dynamics that also include a grounded-in-physics explanation of their skill,” says Sai Ravela, a principal research scientist in MIT’s Department of Earth, Atmospheric and Planetary Sciences (EAPS).

Now, research published in IEEE Transactions on Neural Networks and Learning Systems by Ravela and lead author EAPS graduate student Ziwei Li suggests that this may be possible with compact neural networks, as well as the ability to decompose and investigate how the system evolved this capacity. By watching a neural network emulate a simple chaotic system from a geometric perspective, Li and Ravela reveal that chaos emerges in a series of linear and non-linear steps producing topological mixing—a series of mathematical stretches, rotations, and folds of the input data within the neural network, much like making hand-pulled noodles or pretzels. This understanding means that researchers could train neural networks to efficiently mimic the chaos found in larger systems. This would allow scientists to study long-term behavior and uncertainties faster. Additionally, engineers could better predict and correct disordered behaviors needed for the viability of systems like autonomous robots and self-driving cars, interacting within the real world.

Building chaos

“A big problem with real-world processes is that they or their models are often non-linear and chaotic. As engineered systems get more complex, we need to have some easy, quick ways of inferring long-term behavior — enter the neural network,” says Ravela.

Neural networks contain a series of input and output layers with nodes or “neurons”, indicating the starting conditions of a system and its “solutions” or what the network predicts the system will look like at a later point in time. Sandwiched between these layers is one or more hidden layers, creating an interconnected set of algorithms. Researchers feed observations into the machine, and it attempts to interpret relationships between data points, and produce a reasonable answer.

However, how the machine arrives at those conclusions is poorly understood, making them a “black box.” Additionally, an issue that Ravela and Li point out is over-engineering. Researchers can already build intricate neural networks, which have been trained to take an observation and produce the “right” answer in baby steps. But this doesn’t help scientists explain long-term system behavior in a series of equations, if neural networks are overfitted, especially when the mathematical transformations to explain their behavior needs to be based in physics. Ravela further says, “if neural networks cannot reproduce chaos like their real-world counterparts and their digital twins, how can we trust them to reliably render patterns of behavior far into the future?”

Unpacking the underlying issue

So, Li and Ravela asked if and how a simple neural network could reproduce, extrapolate, and emulate the behaviors of well-known, chaotic systems of similar, low-dimensionality (a Lorenz attractor and a Hénon map), when given limited training data from them. (Both of these cases are represented by 3 or 2 simple equations, respectively; and likewise, models of them resemble a two-lobed butterfly and a boomerang.)

First, the researchers examined a compact neural network’s ability to interpolate within the training data range. To do this, Ravela and Li regarded the numerical solutions from the Lorenz and Hénon map equations as truthful observations one might measure in a real-world system. The solutions comprise trajectories (location pairs of points) from around the full attractor structure, which hint at how one data point flows to the next. A few neural networks, containing 3-8 neurons in a single hidden layer, were trained on a random selection of these Lorenz attractor location pairs (20 up to 150).

When the researchers ran the neural network forward from these trajectories, they found that a small number of data and neurons suffice to “learn” the Lorenz system’s dynamics, but this couldn’t be done with strictly linear functions. Using the smallest viable neural network for this problem (4 hidden neurons evaluating 3 equations) trained with 40 data points confirmed their result and produced a two-lobed structure with a close resemblance with the ground truth, the “perfect” Lorenz attractor.

Li and Ravela took the analysis a step further by comparing the predictability of the Lorenz system trajectories with those of the neural network, side by side. With the same setup, the researchers trained the machine on only 40 trajectories from the Lorenz attractor and selected 2000 starting positions that paired with nearly cousins on the neural network’s model. Ravela and Li watched and measured the rate at which the trajectories diverged at certain timesteps, which turned out to be nearly the same. “This means that the neural network itself is as chaotic or as predictable as the Lorenz system,” says Li. “So, it is doing all the desired behavior,” without the issue of overfitting. Additionally, they showed a 5-neuron machine a small set of data from one side of the Lorenz attractor, and the neural network produced trajectories that bifurcated—it extrapolated the other lobe of the Lorenz attractor structure, which was outside the training information.

“Generalization doesn’t seem to work well with limited training data, extrapolation even less so,” says Ravela, “but our finding is a complete counterpoint: even though the equations are non-linear and chaotic and very little training data in a small region was used, it turns out that the overall structure matches remarkably well.” In other words, “we couldn’t give you the exact weather forecast, but we might be able to describe the important patterns over longer periods, much faster.” He’s quick to point out that they are dealing with an abridged, chaotic system that has its noise scrubbed out, which most systems lack, but this idealized set-up is sufficient to argue for the efficacy of the neural networks on learning chaotic behaviors.

A smooth transition inside a black box

A characteristic of chaos, Li raises, is that the perturbations in chaotic systems don’t grow indefinitely large; repetition and iterations of similar conditions and states develop throughout the system’s flow. From a geometric perspective, Ravela and Li postulated that for this to happen, the trajectories or vectors within the system would need to undergo a series of rotation, stretching (a linear operation), rotation, and compression or folding (non-linear behavior). “A random system could sprinkle data points in no apparent order filling up an unbounded volume,” says Ravela. “But a dynamical system’s order looks like a low-dimensional object, for example a curve that twists, turns and loops within a bounded volume.” So, considering it in a geometric way provided the researchers with a natural insight into how the neural network is mimicking chaotic behaviors.

To illustrate this, Li and Ravela observed a lower-complexity system: the iterations of a 2-neuron network trained on a Hénon map, which exists in 2-D space; think x-y coordinates. This way they could decompose the types of functions that the neural network applied to form the boomerang structure. Lo and behold, the folding behaviors emerged, and the structure mapped back onto itself, much like the process of making pretzels or hand-pulled noodles. Ravela says this process is indicative of topological mixing, a classic way of creating chaotic behavior. What’s neat, Ravela adds, is that the governing equations from the Hénon map and Lorenz attractor predict the minimum number of neurons needed. So, they confirmed overfitting didn’t occur and the machine operated efficiently.

“This isn’t really an argument about what the neural network learns, it is an argument about what the neural network is doing once it learns, which reveals something about how neural networks work,” says Li.

Li and Ravela see these findings as a promising step: a neural network with reasonable complexity would be a good candidate to represent many chaotic dynamics, such as filling in broken paleoclimate records or providing stochastic model predictive control for engineered systems like robots. Most importantly, Ravela says, “perhaps you can just use your neural network to predict stock trends or climate change, because we know it emulates chaotic dynamics so well without explicitly knowing the governing equations.”